Exploring STM32N6

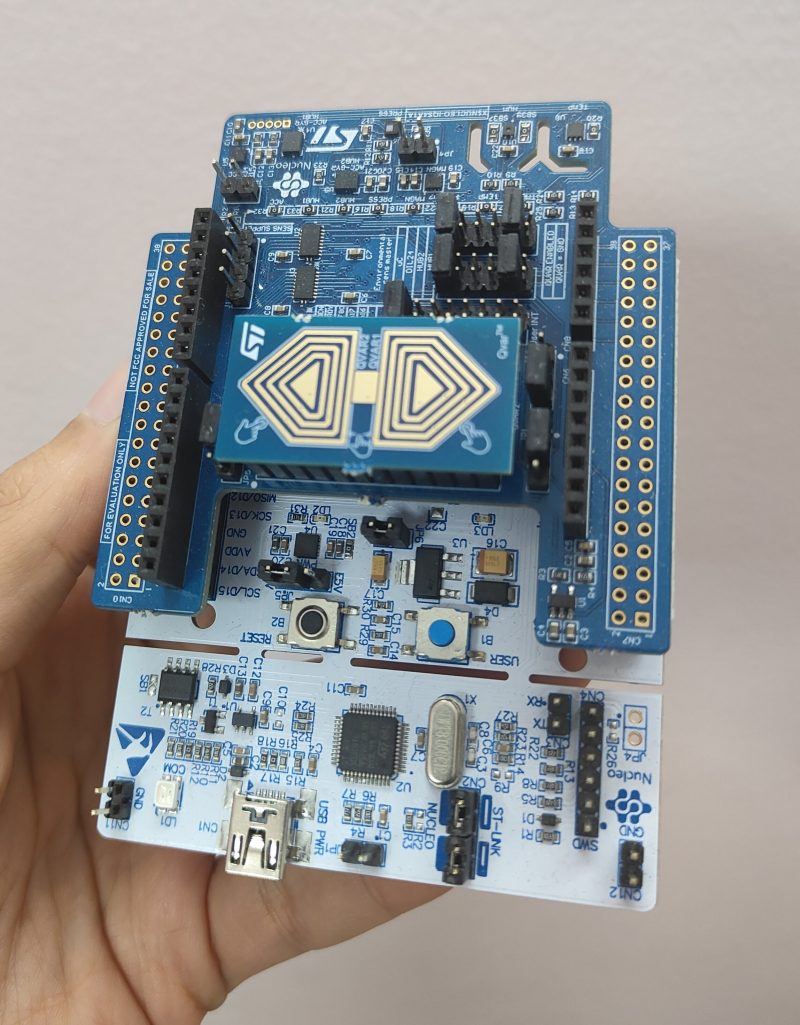

A few months ago, I attended the **STM32 Summit**, a global event where STMicroelectronics launched its latest microcontroller series: **STM32N6**. This microcontroller is geared towards AI/ML applications thanks to its integrated neural processing unit (ST's Neural-ART Accelerator) and high-performance Arm Cortex-M55 core.

After the launch, I immediately got my hands on the STM32N6 discovery kit to dive into the world of Edge ML.

What started as an exploration of Edge ML has since expanded into a broader learning journey covering:

✅ Camera interfaces

✅ Displays, touchscreens, GUIs and TouchGFX

✅ Azure RTOS (ThreadX) and advanced interfaces like USB and Ethernet

✅ Audio processing

✅ Advanced microcontroller-based hardware with external memories, displays and cameras

I’ve already built a few simple applications using TouchGFX and run several ML and computer vision demo applications.

My current goal is to learn camera and display interfacing and integration. Eventually, I plan to work my way up to feeding audio and visual signals into various ML models to build embedded AI applications.